Beijing, China

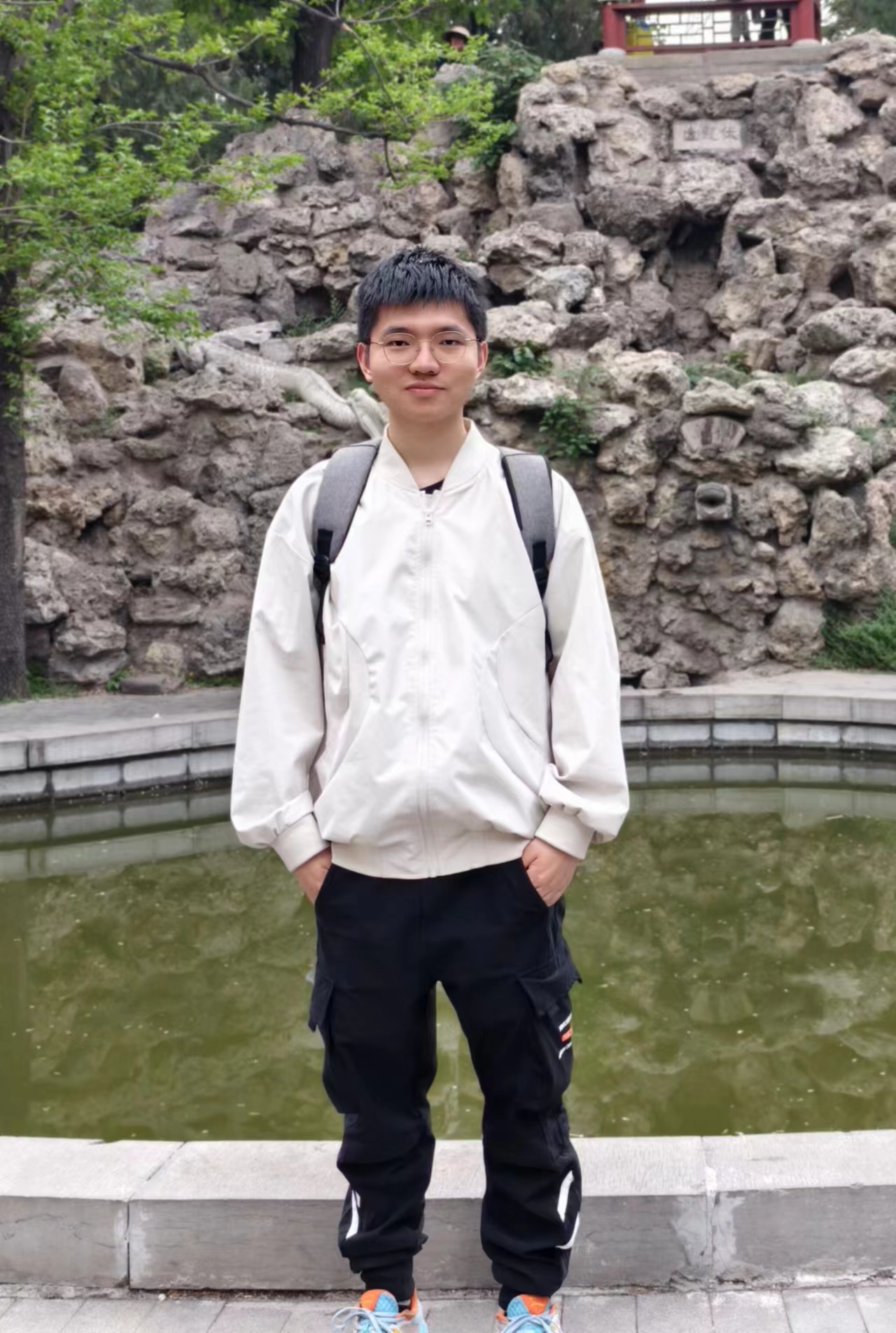

Ye Tian

Deep Learning · Diffusion Models · Multimodal LLM

I’m Ye Tian (田野), a first-year Ph.D. student at the School of Intelligence Science and Technology, Peking University, advised by Prof. Yunhai Tong. I also completed my bachelor’s and master’s degrees in Computer Science at Peking University, in the School of Computer Science and Electronic Engineering, where I had the privilege of collaborating closely with Prof. Bin Cui and Ph.D. Ling Yang.

I’ve worked as a research intern at Kling, Kuaishou, Pixverse, and ByteDance. My research focuses on AI-generated visual content, multimodal LLMs, and unified models. I’m currently working on diffusion-based language models and am always open to academic or industry collaborations; please feel free to reach out at tyfeld@gmail.com.

news

| Jan 27, 2026 | MMaDA-Parallel is accepted to ICLR 2026. |

|---|---|

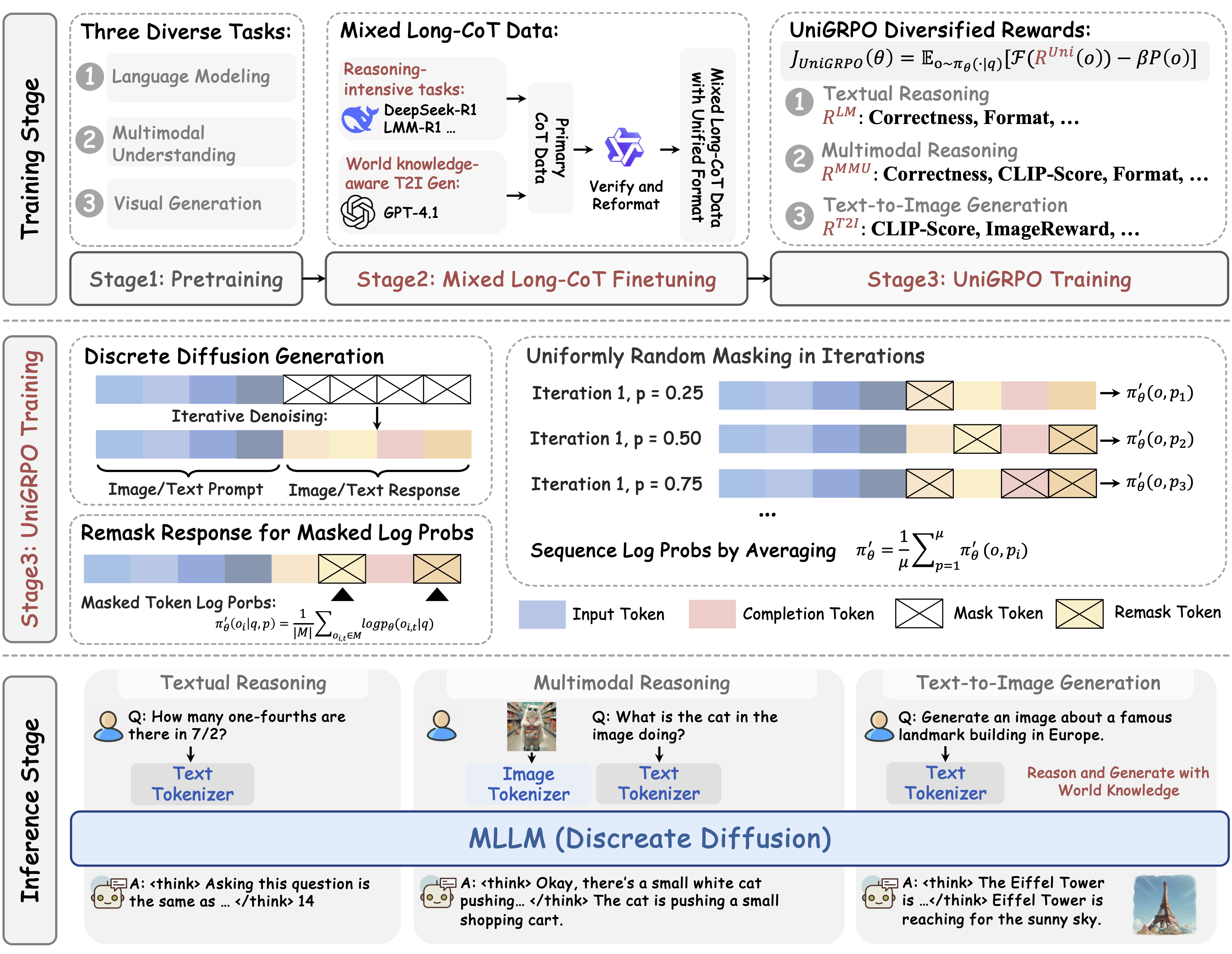

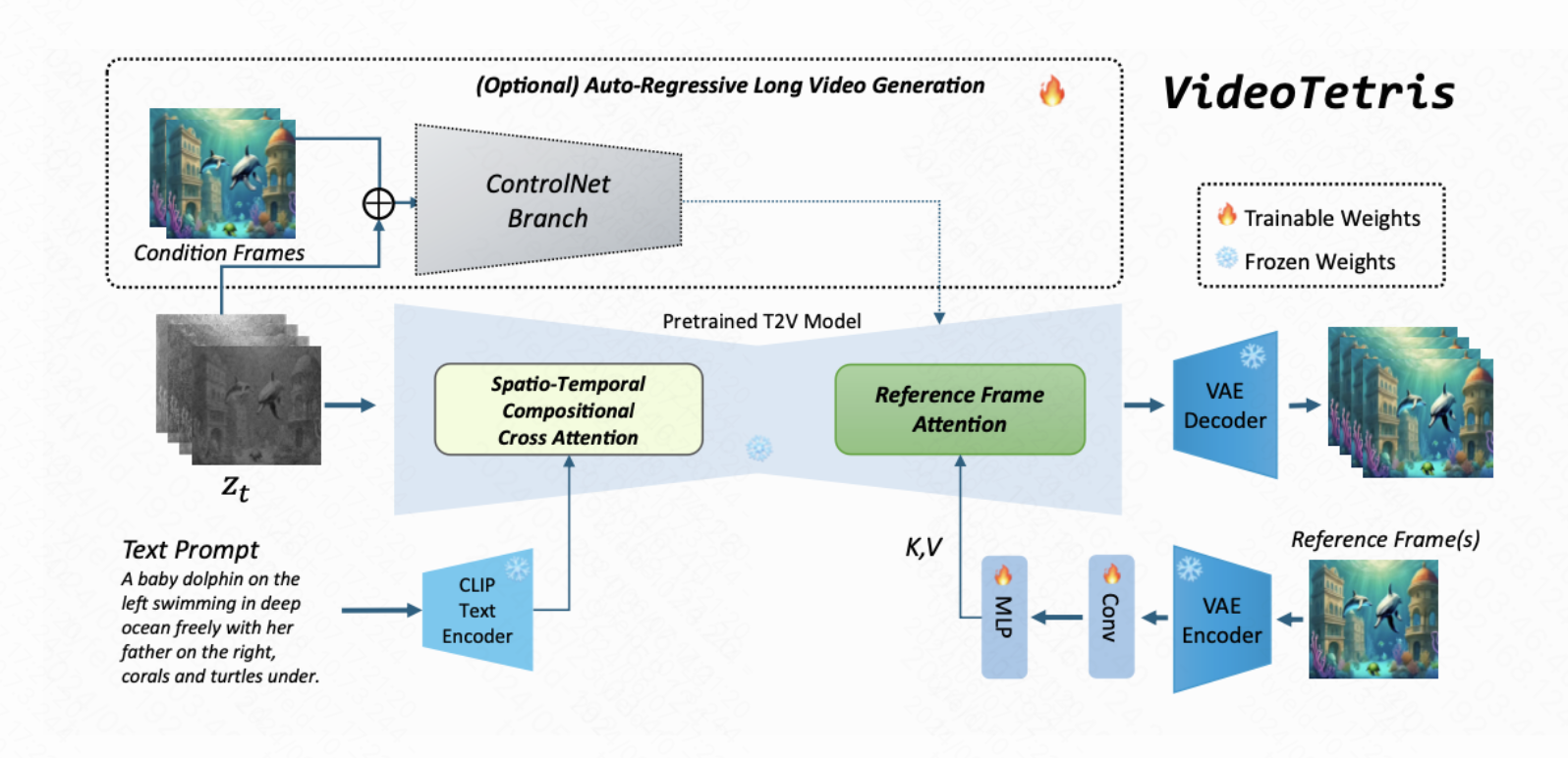

| Nov 18, 2025 | I developed a parallel generation framework MMaDA-Parallel for thinking-aware editing and generation. Check out the project page for more details. |

| Sep 19, 2025 | MMaDA is accepted by NeurIPS 2025. |

| May 21, 2025 | MMaDA is released, and I serve as the core code contributor. We built a novel unified multimodal understanding and generation model, purely with a discrete diffusion backbone. |